projects

LLM Post-training & Alignment

powering Apple Intelligence

Worked on large language model (LLM) post-training and alignment for areas such as summarization and text generation, powering products used by billions every day.

read more: Apple Intelligence

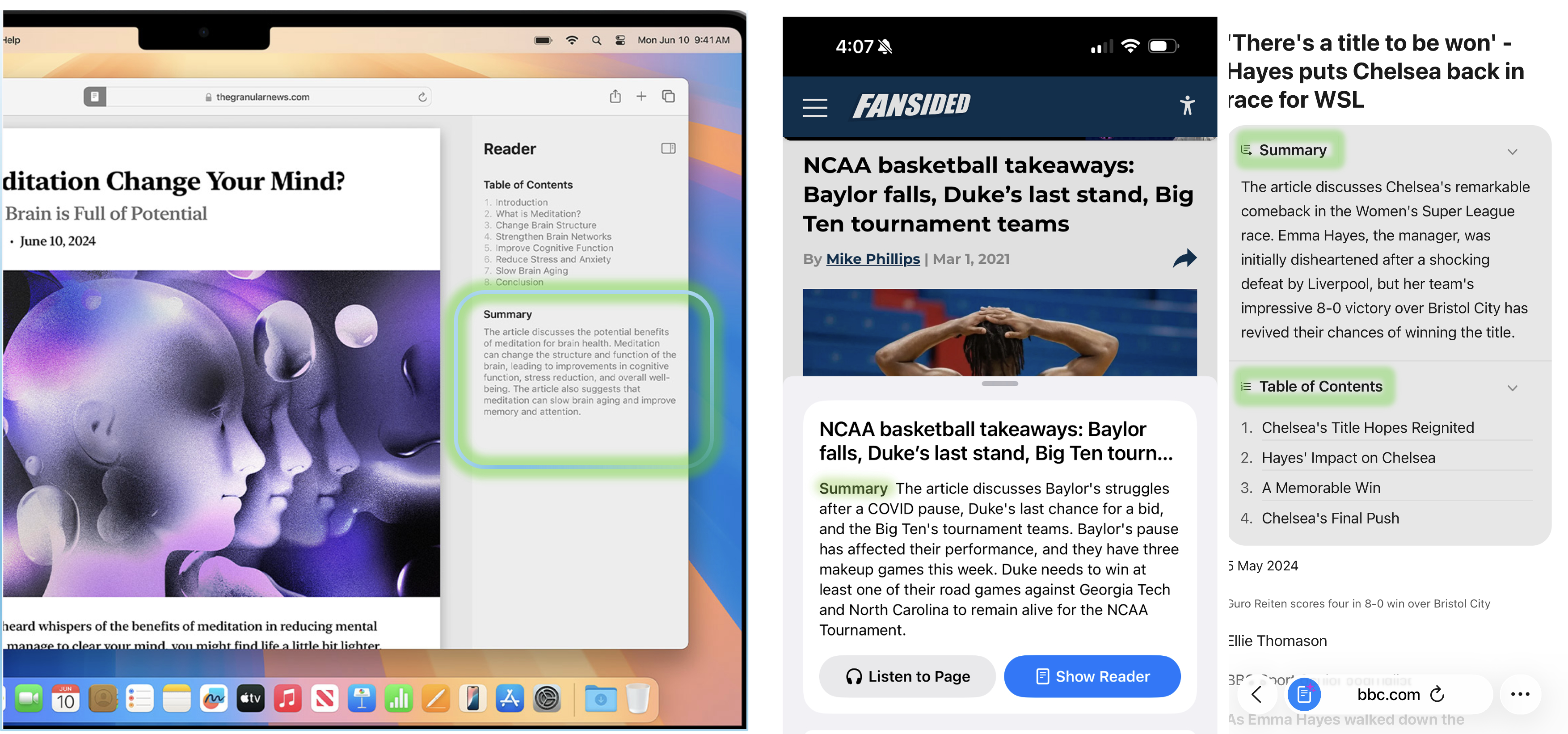

Smart Browsing Assistant

Web-scale Summarization and ToC generation

Led development of large language models (LLMs) powering AI features in products used by billions every day, such as the Safari web browser.

The project launched at Apple WWDC 2024.

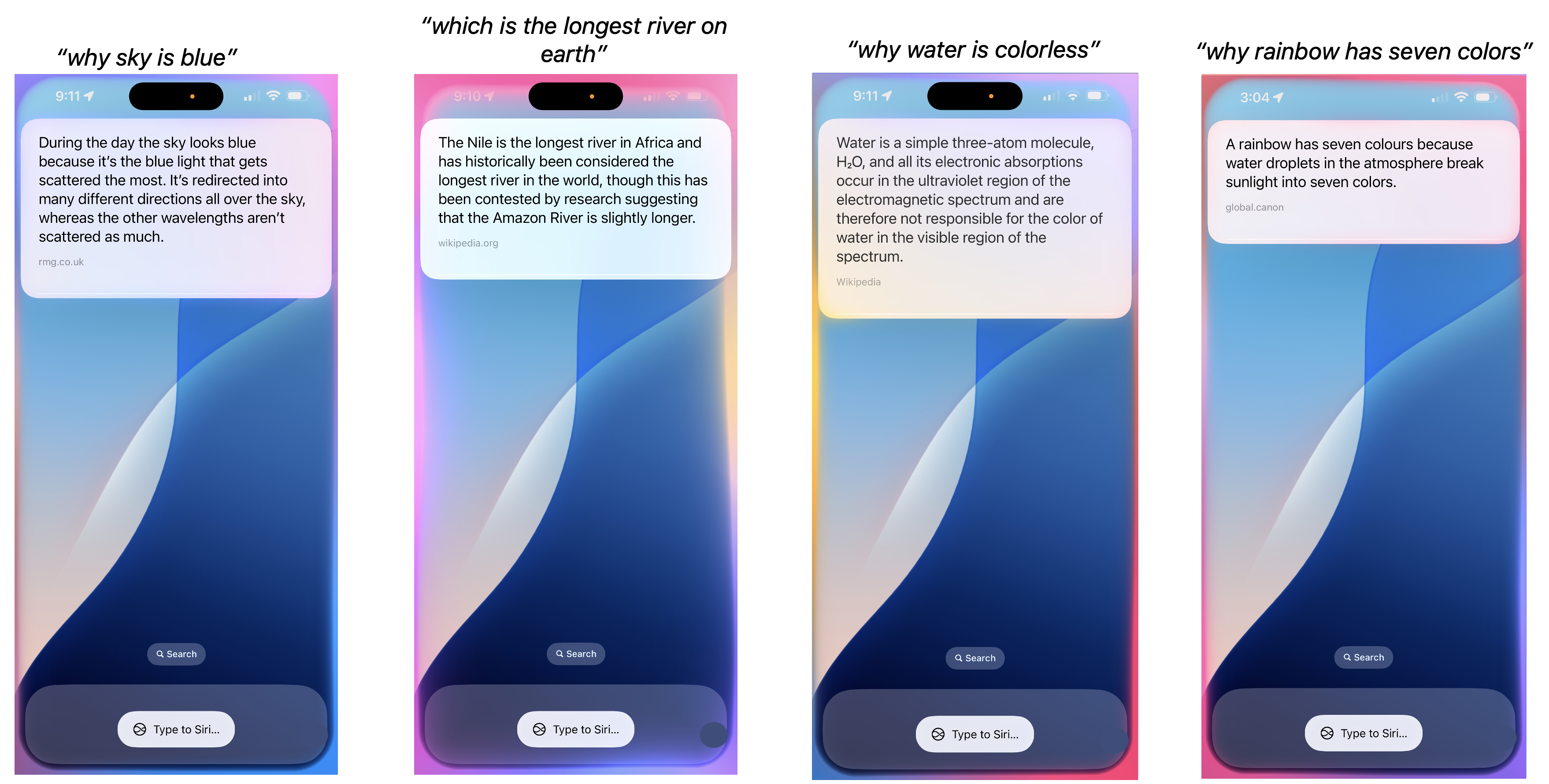

Open-domain Question Answering

Answering all your Questions

Developed ML/NLP models that serve the most relevant answer to your question and ensure Siri's answers are based on the most authoritative sources.

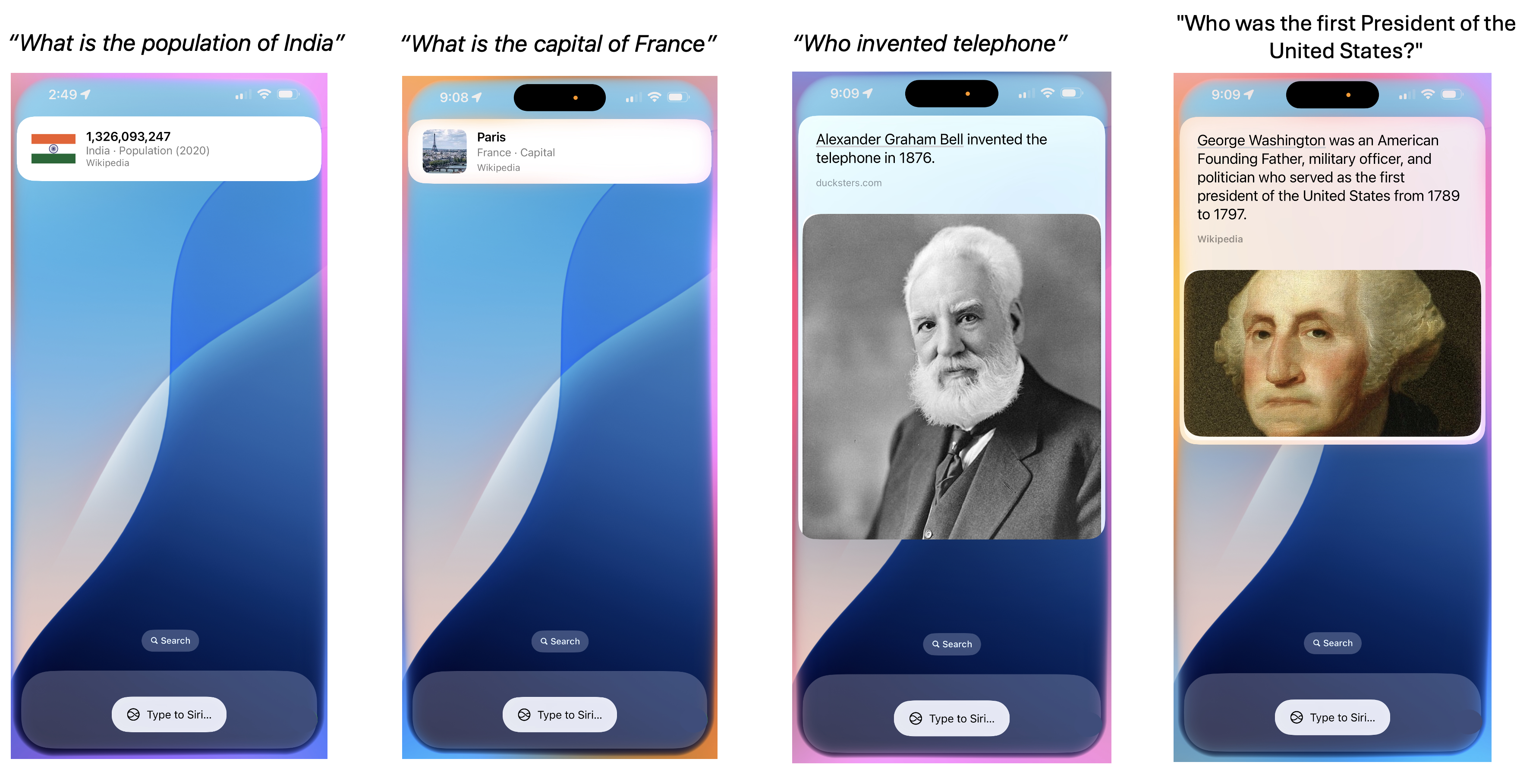

Natural Language Understanding (NLU) for Question Answering

Developed NLU models for Question-Answering in Siri

Developed NLU models for answering knowledge-seeking questions posed to Siri.

Specifically, worked on multi-task neural models for NLU.

SmartCompose

Led Neural Language Generation efforts powering different Microsoft products.

Joint work with Chris Quirk, Peter Bailey and others at Microsoft AI Research

Developed and shipped a deep-learning-powered text-generation feature that automatically completes what the user is writing in Microsoft products.

Specifically, developed neural language models for reranking and text generation using contextual signals.

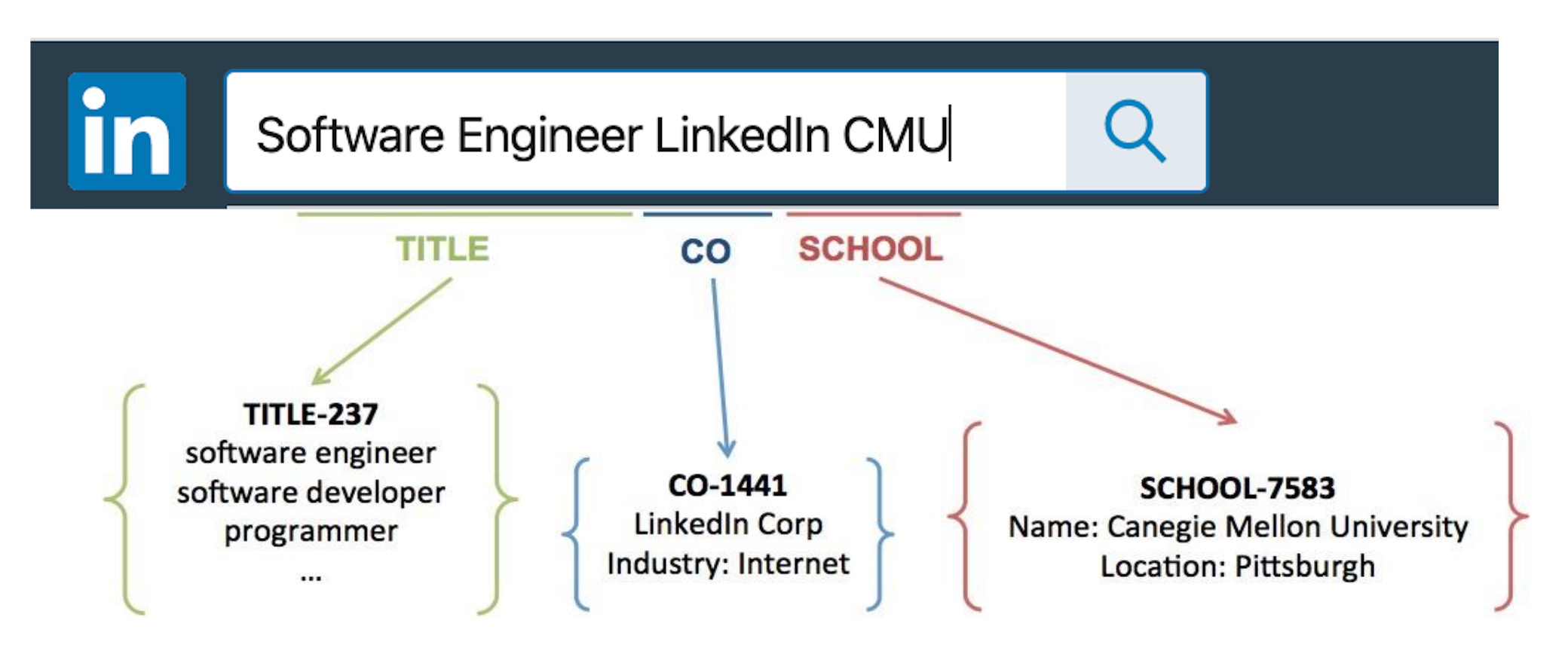

Query Understanding for LinkedIn Search

Led efforts to make query understanding more intelligent

Empowering users to search in natural language.

Bing Knowledge Graph - Satori


Developed an NLP framework powering Bing's Knowledge Graph (Satori) that helps it grow by selectively ingesting knowledge from the web.

Joint work with Silviu Cucerzan at Microsoft AI Research

Machine Learned Ranking for Microsoft Bing and other products


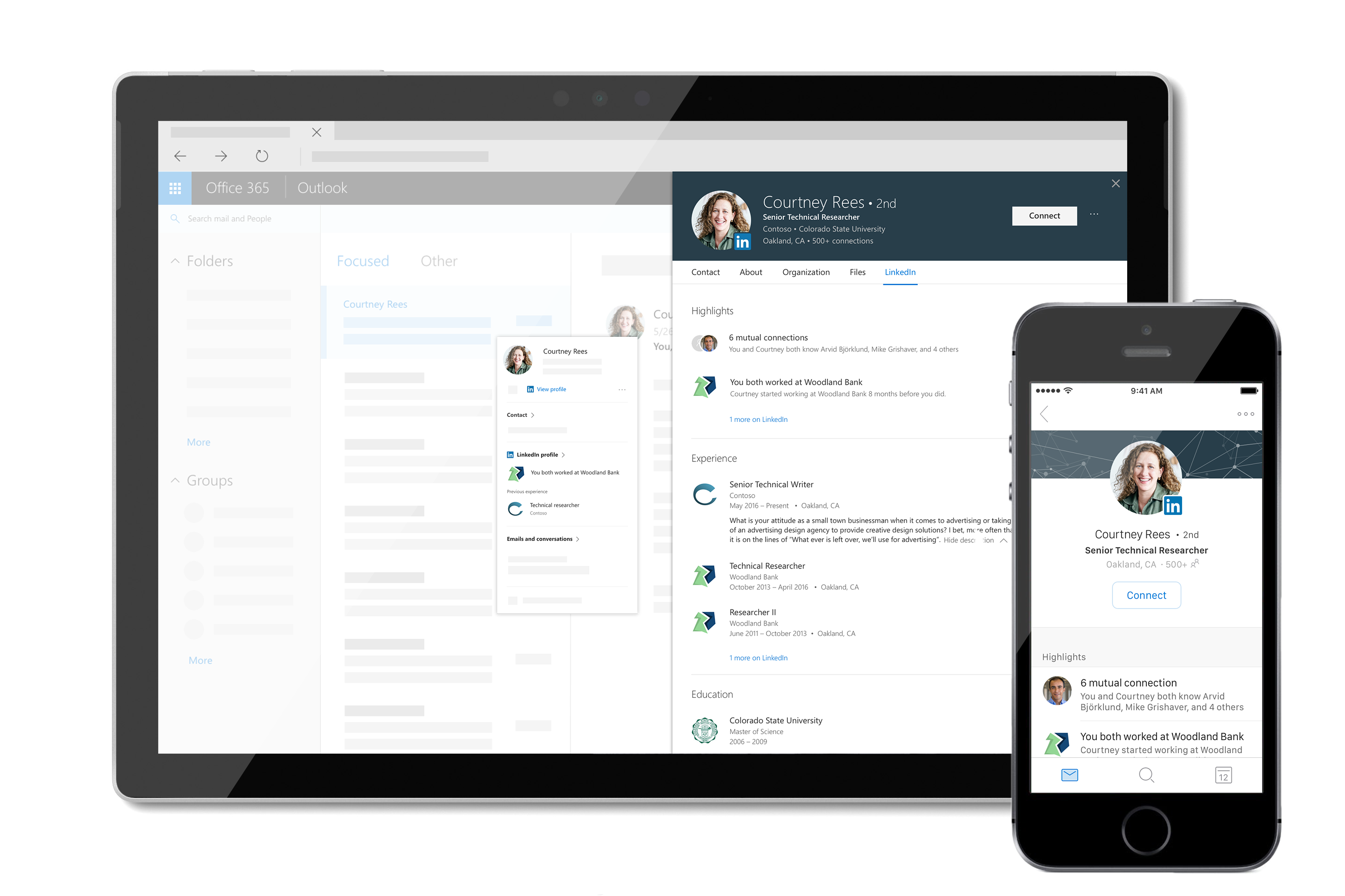

Developed and shipped ML rankers for Bing and Office 365 products

You can learn more about one of the projects here:

https://blog.linkedin.com/2017/september/250/adding-linkedin_s-profile-card-on-office-365-offers-a-simple-way

CMU Never-Ending Language Learner (NELL)

Learning to read the web

A natural language processing framework for detecting glosses in large web corpora such as Wikipedia and ClueWeb. The core of the framework is built from filters, transformations, parsers, feature extractors, samplers, and modelers in an easy-to-use, extensible design. This enriches NELL's knowledge.

Worked with Prof. William Cohen as my advisor during my time at Carnegie Mellon University. You can read more about this project at: http://rtw.ml.cmu.edu/rtw/

One Laptop per Child

Open source contributor to the OLPC laptop's Sugar desktop environment

The Sugar desktop environment is being developed for the One Laptop per Child project in collaboration with SugarLabs. My goal was to develop Sugar Activities that make the learning experience fun on XO laptops.

As part of this effort, I developed or contributed to:

- Wikipedia Hindi - Wikipedia in Hindi for Sugar.

- DevelopWeb - an Activity for web development with which children can build websites using HTML, JavaScript, and other web technologies. Children can quickly learn how to develop web pages step by step through examples provided for each HTML component.

- Oopsy - a Sugar Activity that lets children develop C/C++ programs, compile them, and execute them to learn, explore, and have fun!

- Project Bhagmalpur - worked with Anish Mangal, Dr. Sameer Verma, and Gonzalo Odiard to deploy an XSCE school server at Bhagmalpur, India, mainly to give kids access to the internet and to useful tools for accessing knowledge readily.

Download: https://activities.sugarlabs.org/en-US/sugar/addon/4632